What is Azure Cognitive Search?

Azure Cognitive Search is a PaaS solution in Azure that allows you to integrate sophisticated search capabilities into user applications. Azure Cognitive Search platform enables faster ingestion, enrichment, and exploration of structured and unstructured data, and you can integrate Azure Cognitive Search into mobile apps, e-commerce sites, and other business apps. Azure Cognitive Search brings together some of the best work for Microsoft across search and AI and makes it available to everyone. Microsoft developed some advanced features for Azure Cognitive Search, and the data ingestion can consider as the initial stage of it. Data can either pull in automatically from an Azure data source or push any data requires to the search index using the push API. These data contents are not uniform, and they exist in different formats such as records, long texts, or pictures. Cognitive search can extract and index information from any format and applies machine learning techniques to understand the latent structure in all data. For example, it can extract key phrases, tag images, the tech language, locations, and organization names. The combination of cognitive search with cognitive services enables search to understand the content of all nature.

The sophistication of data ingestion, the smarts to understand, and index content with keyword search are available for a while, and they also offer some key advantages. Microsoft moves into the next step of this journey with the new semantic search capabilities that include semantic relevance, captions, and answers.

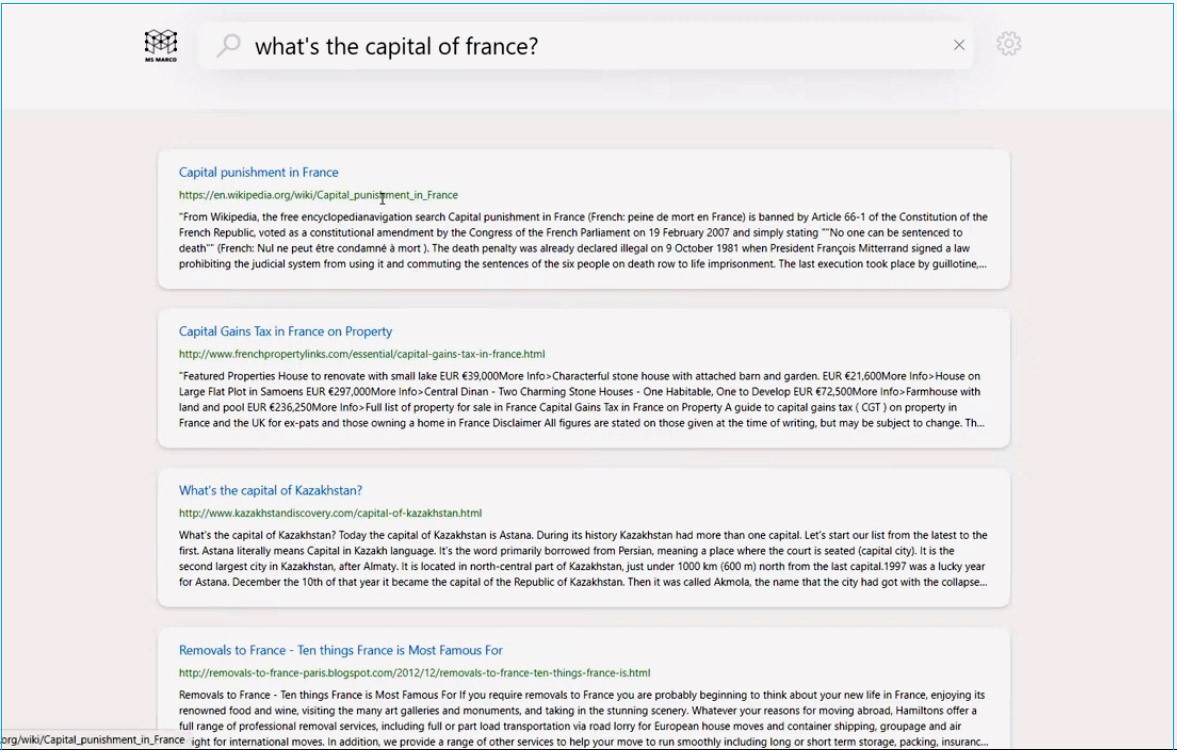

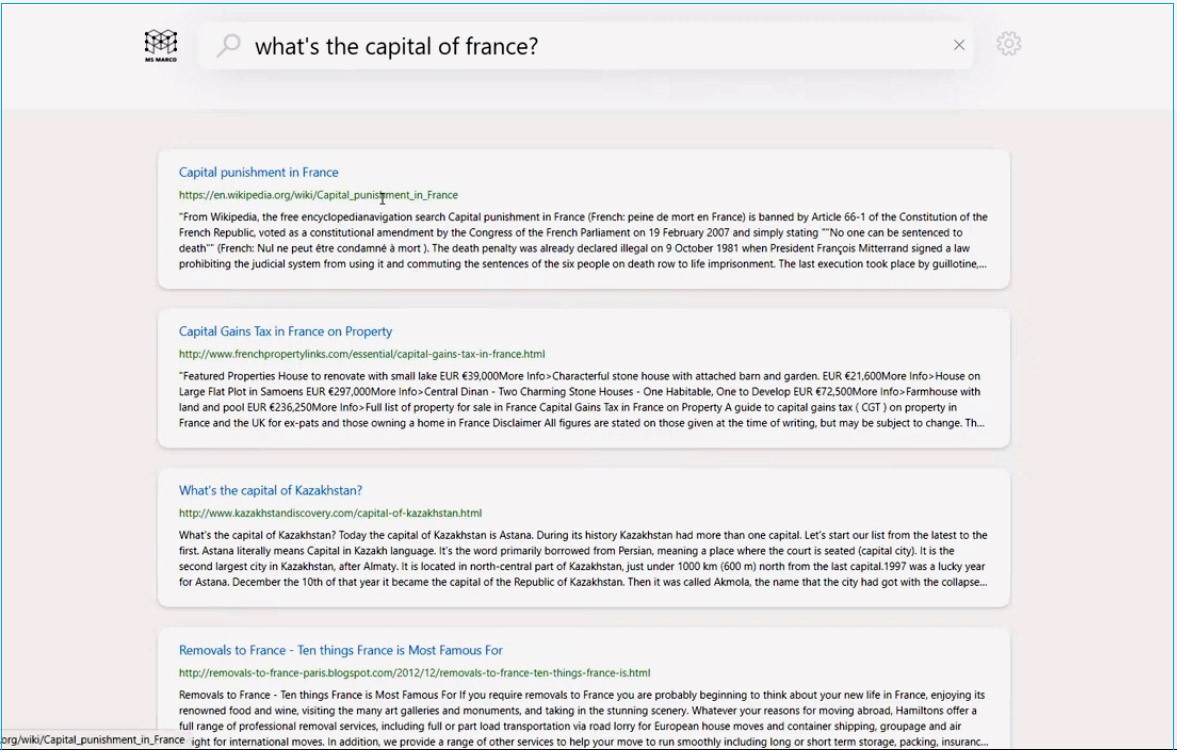

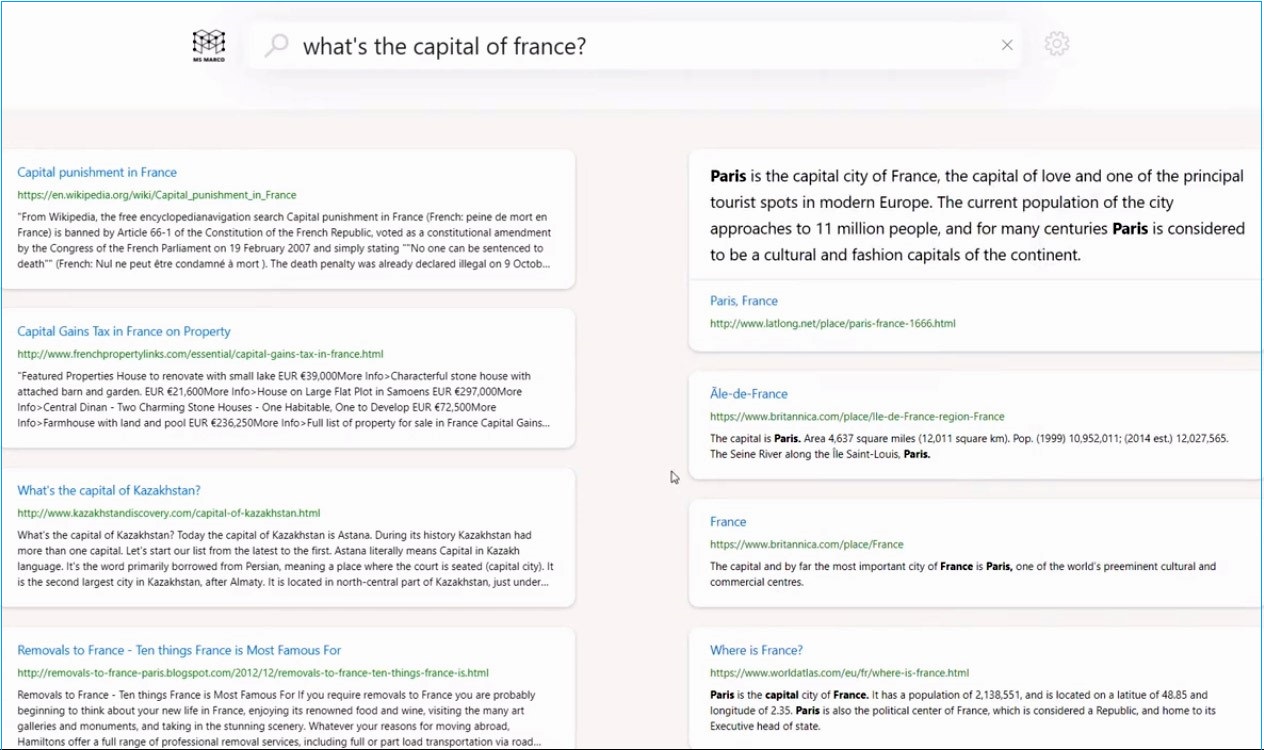

To demonstrate this, we can use a demo application fronting a cognitive search index with a dataset often used for evaluation purposes called MS Marco. Let’s search for what’s the capital of France. The results will match the keywords in our search, but it looks like the ambiguity of the word capital, particularly, caused top results to be a bit all over the place. You can see capital punishment, capital gains, the capital of Kazakhstan, removals to France.

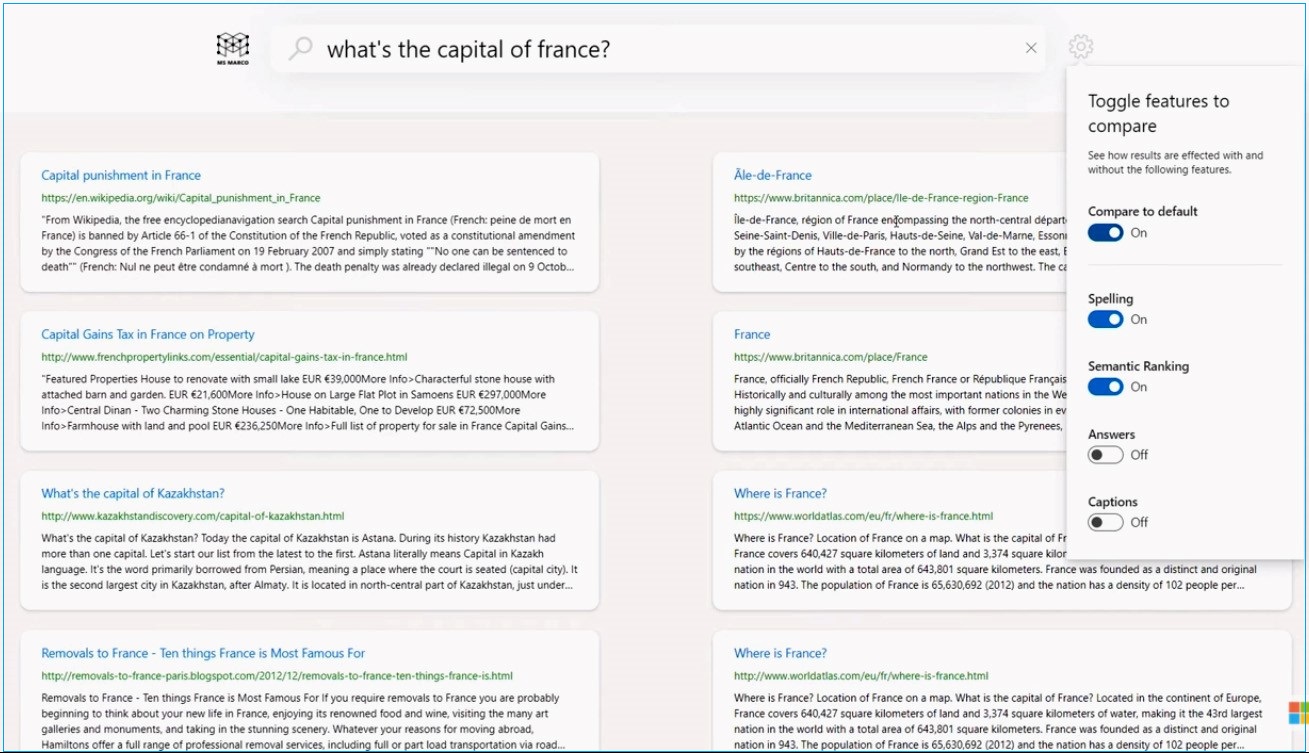

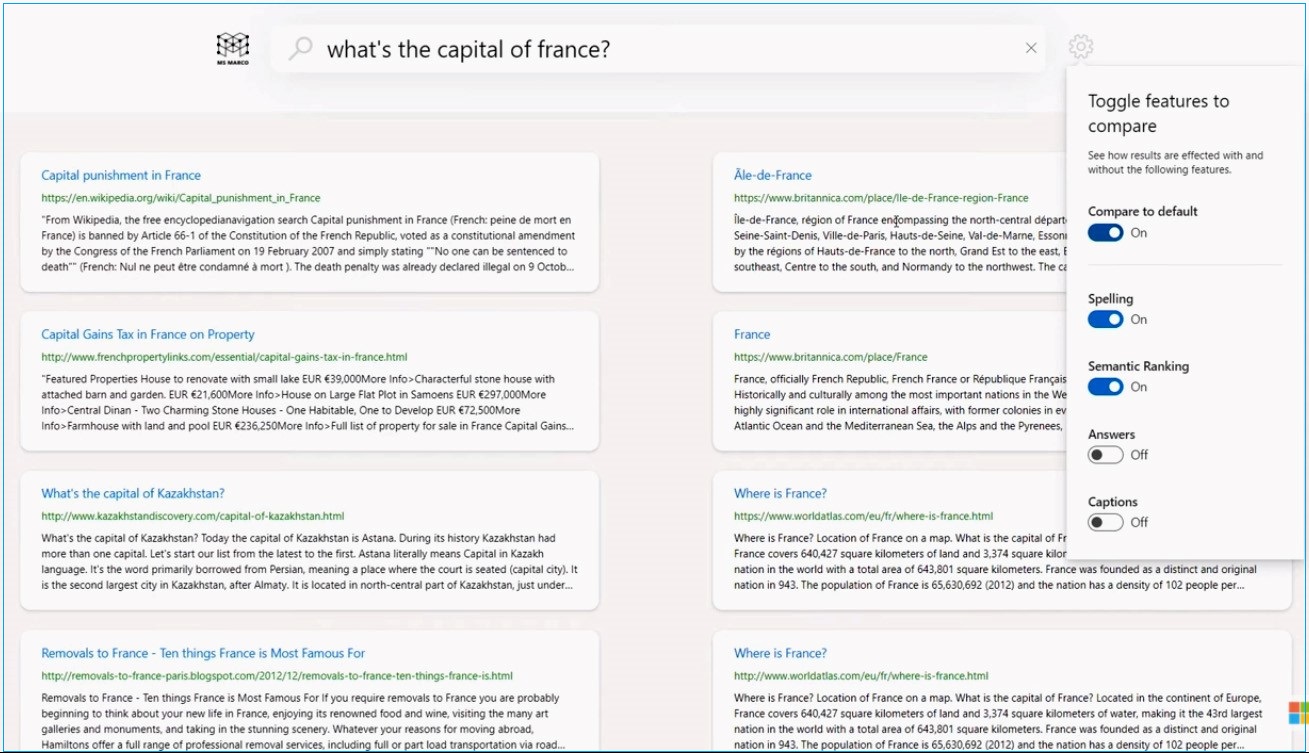

Next, enable the semantic search along with the Spelling. With semantic, Azure and Bing's teams work together to bring the state-of-the-art learned ranking models to Azure to leverage in custom search solutions. If you enable Compare to the default option, you will see the results side-by-side, keyword search on the left, and semantic search on the right.

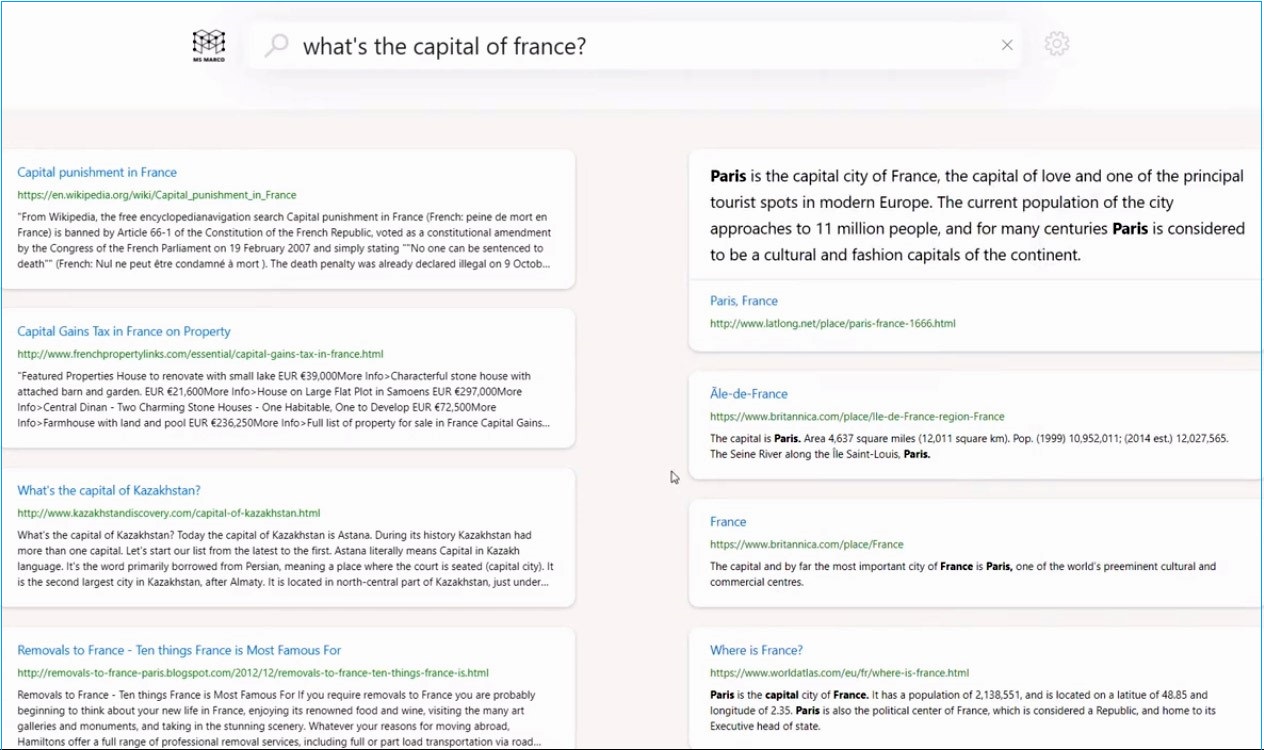

If you enable the semantic Captions and Answers options, you will see a noticeable improvement in the quality of the results. Not only do you see relevant results, but you can see captions under each result that are meaningful in the context of our query. You can also see an actual answer proposed by cognitive search on top. It allows you to create the same experiences that web search engines offer for your applications and data.

How to add semantic search into apps?

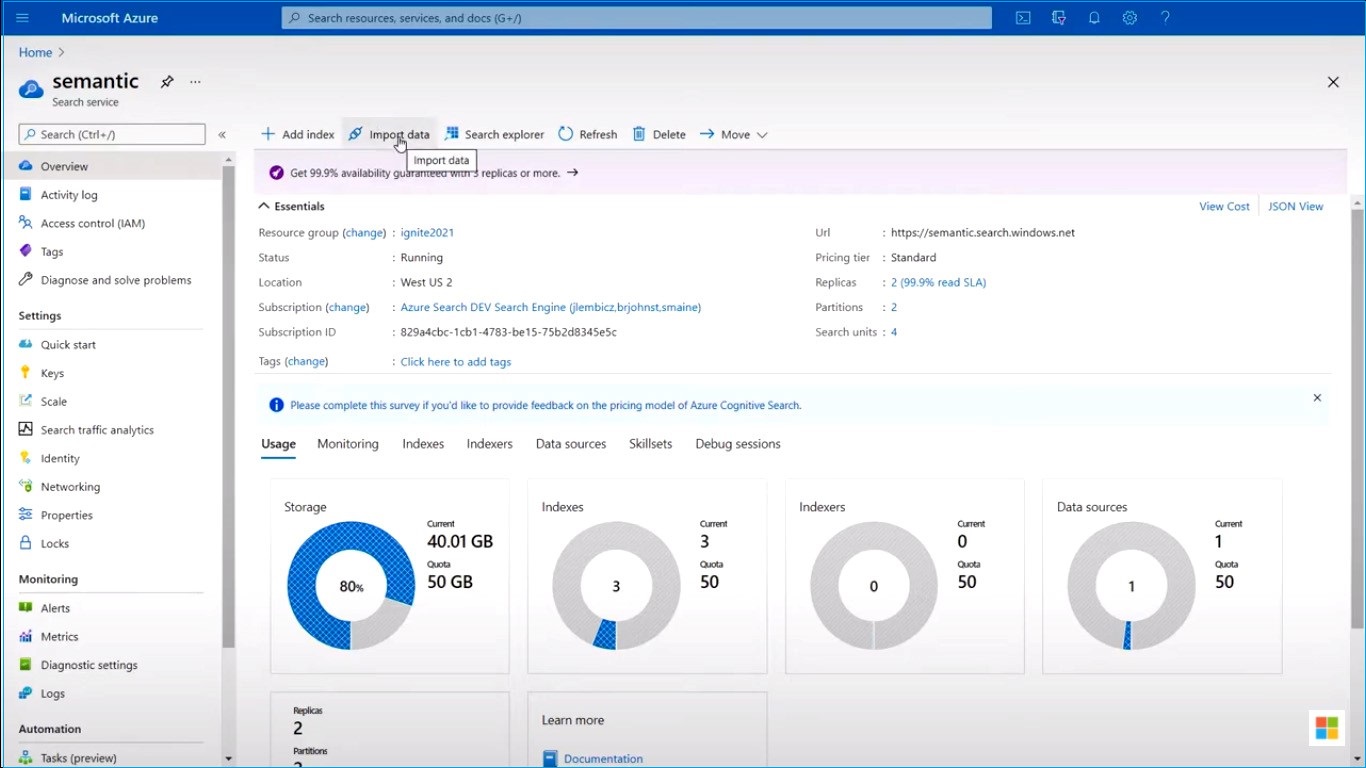

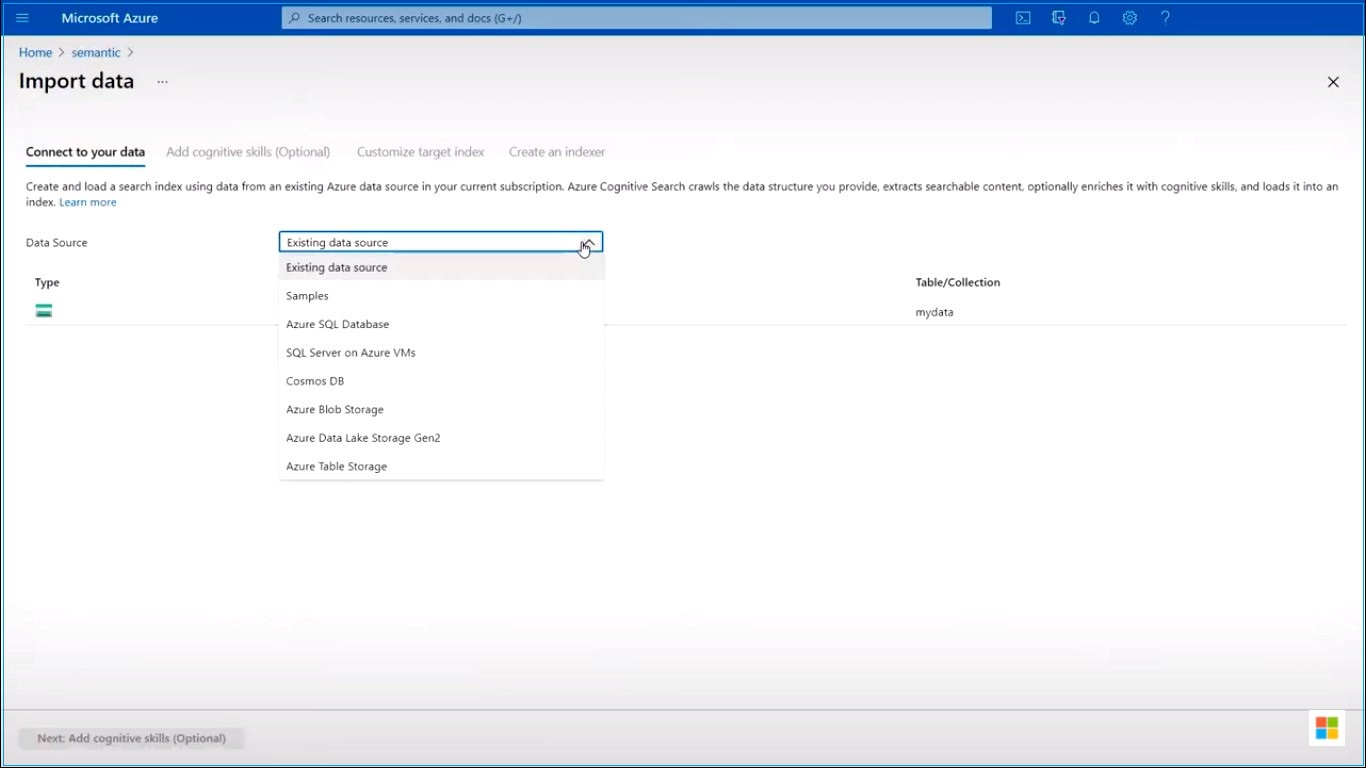

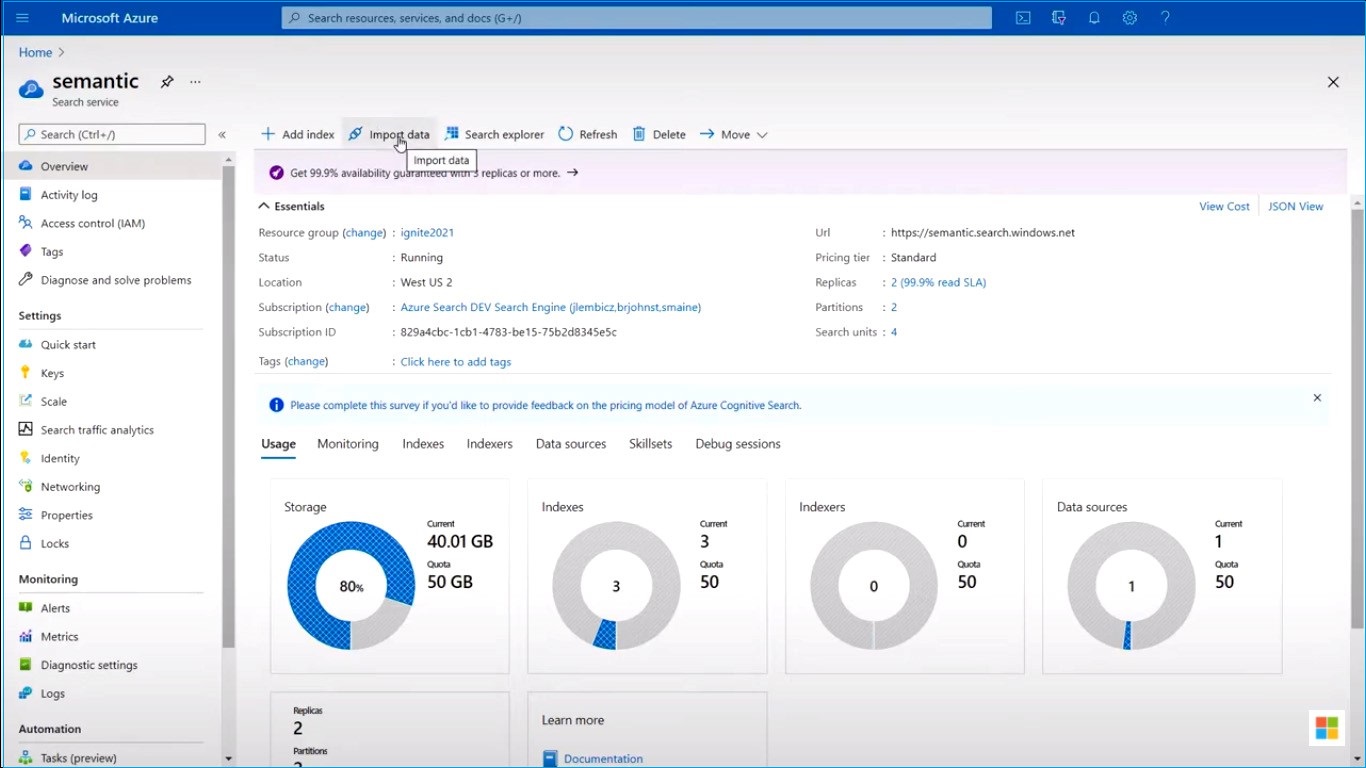

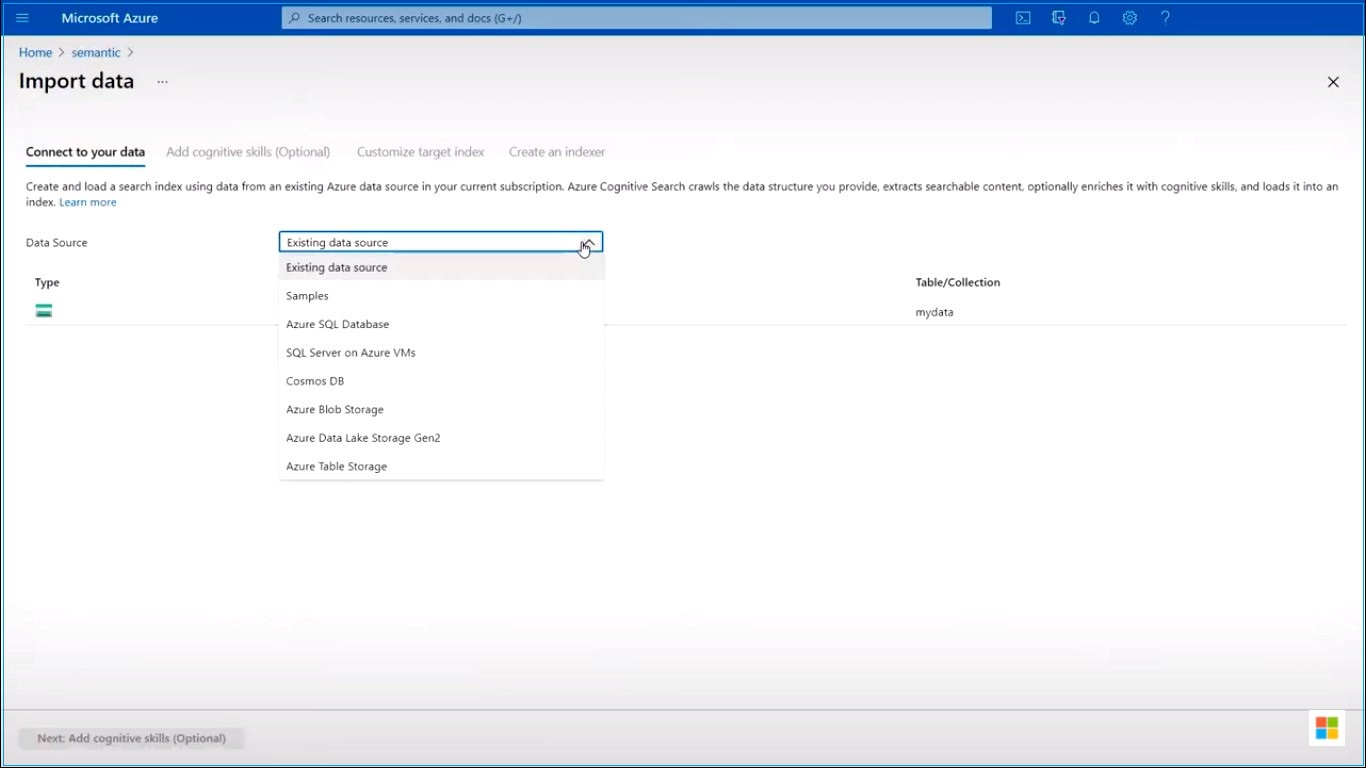

The first step requires creating a cognitive search service and importing data from existing Azure data sources.

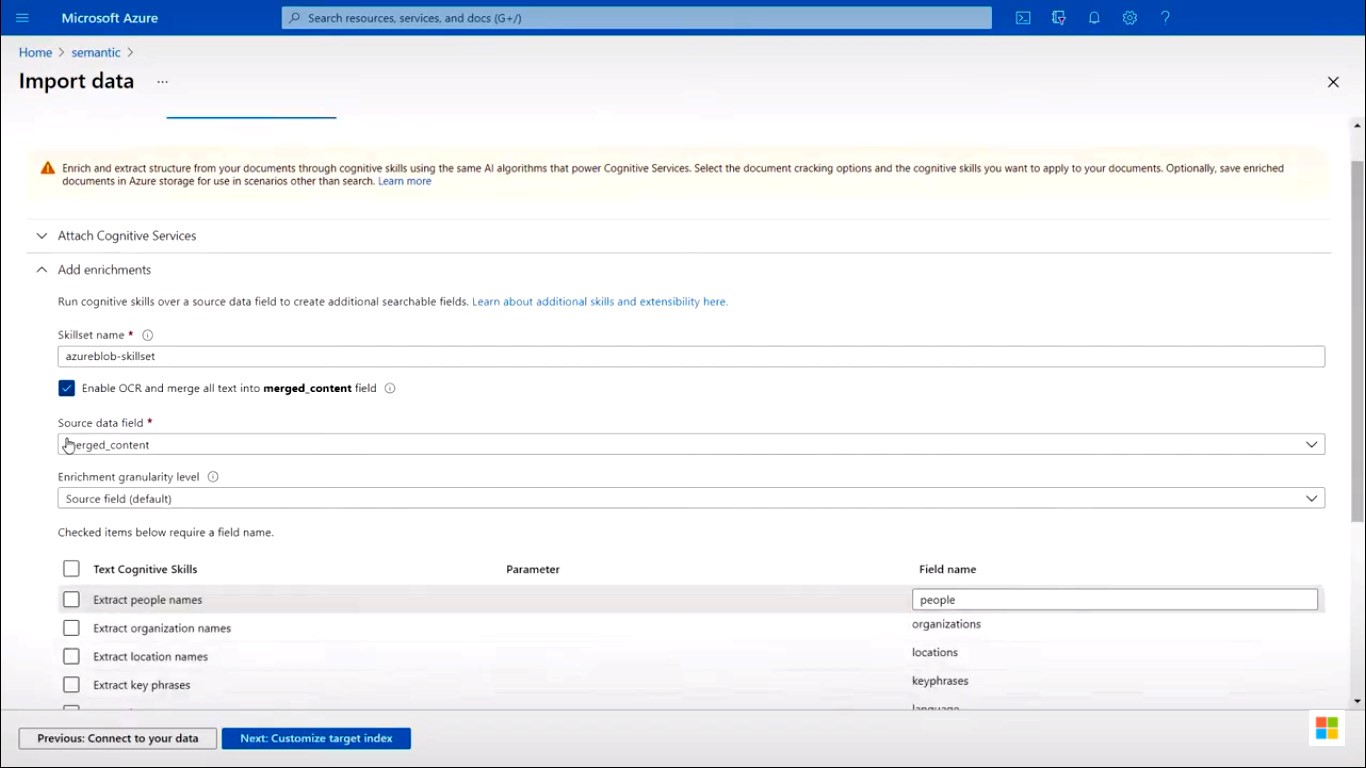

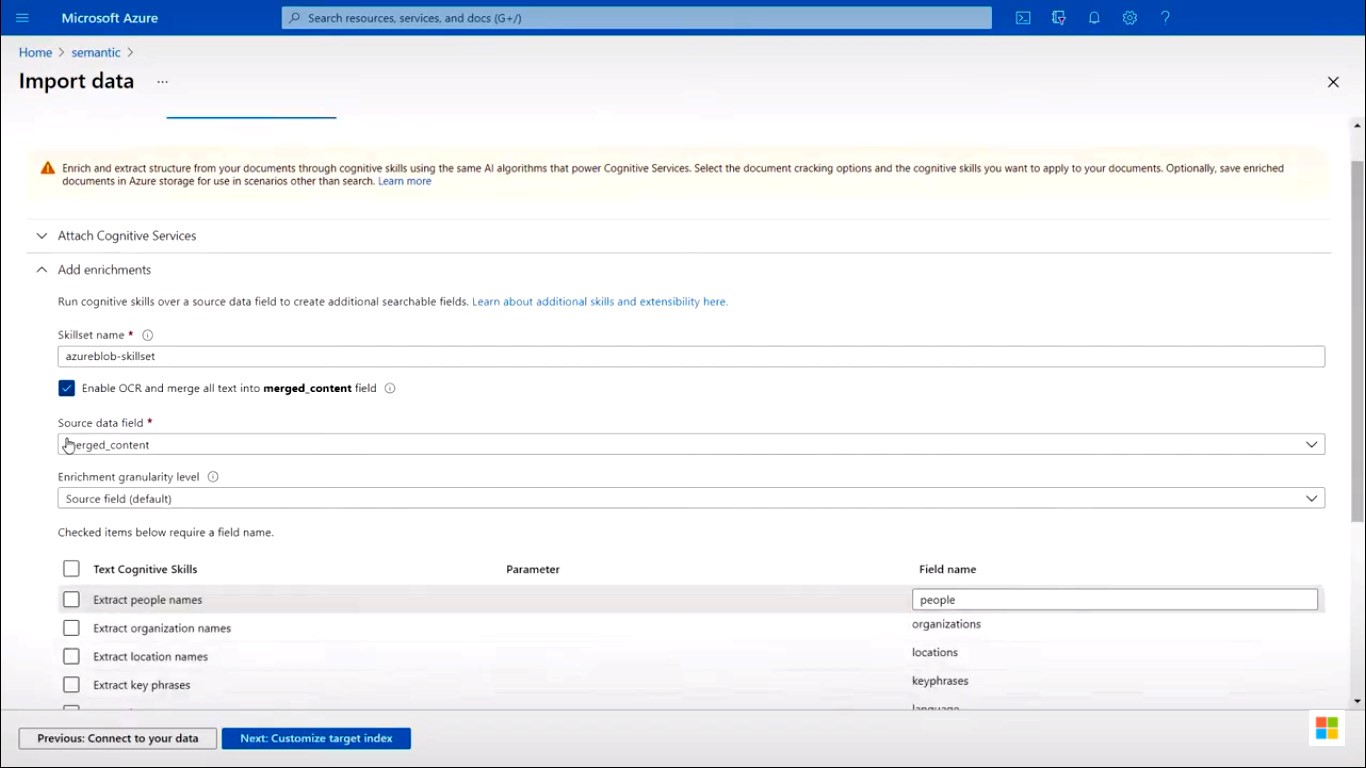

The next step enables one or more cognitive services or custom models to enrich the data that ingests. For example, you can enable optical character recognition, edit extraction, computer vision, and more when adding enrichment. Lastly, you can customize your index definition and then set up indexer options. Now, you have an ingestion process that will run automatically, detect changes, enrich data and push it into your index.

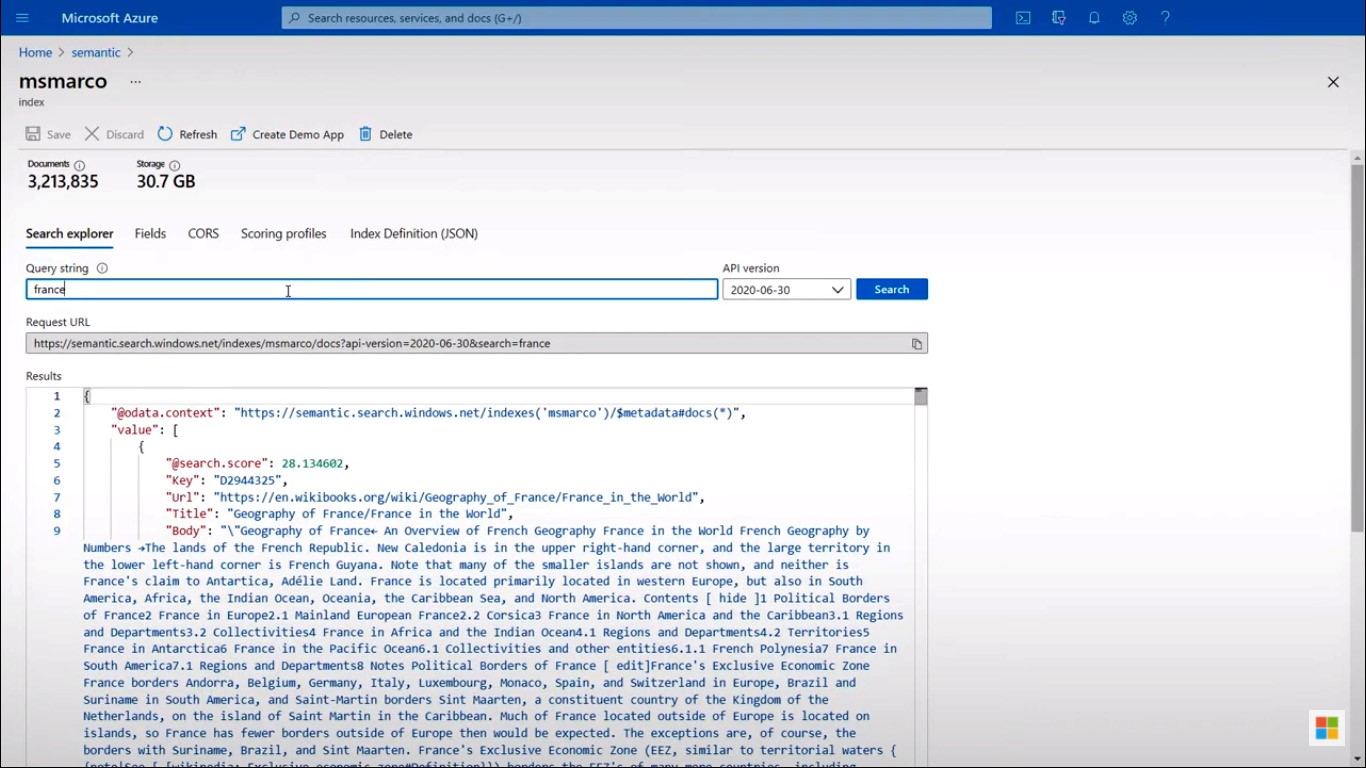

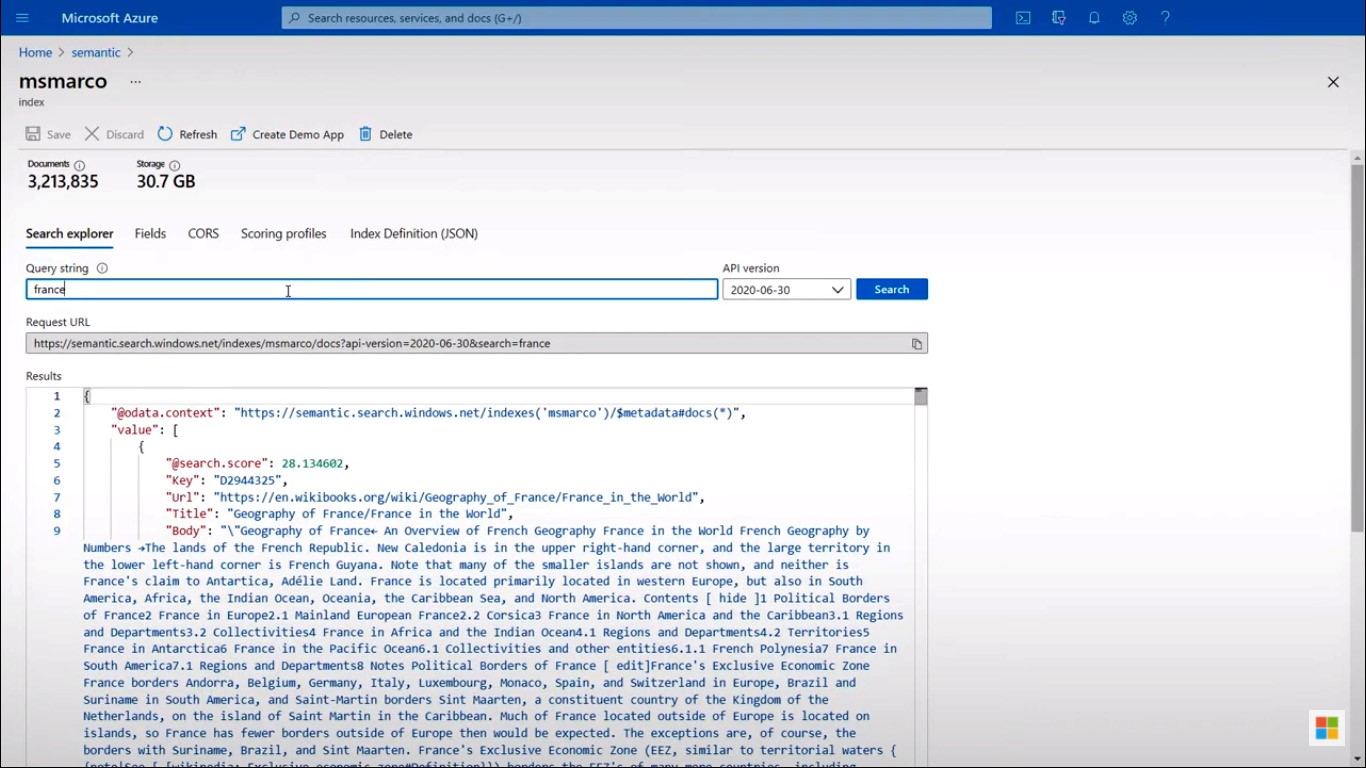

Let’s say you have already created an index. To test this, you can go into this index and search.

How does intelligence work?

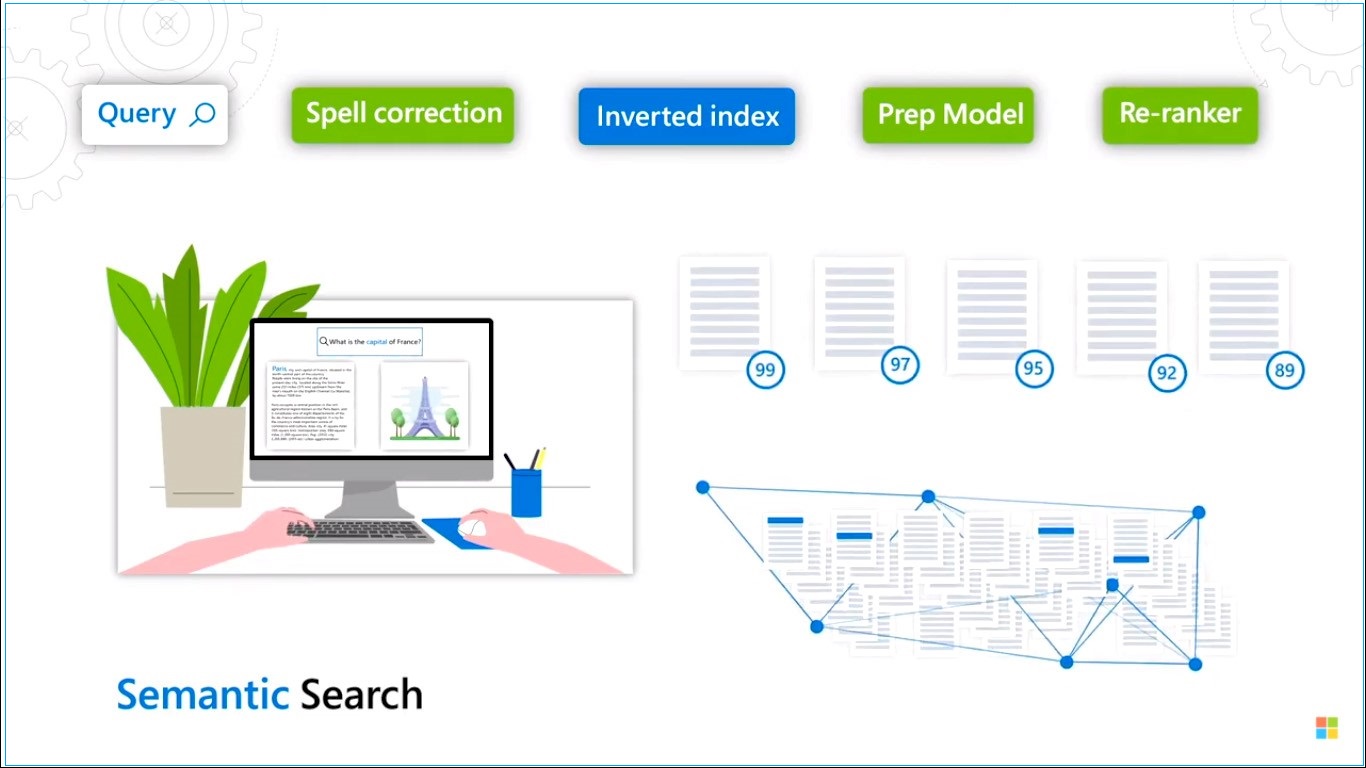

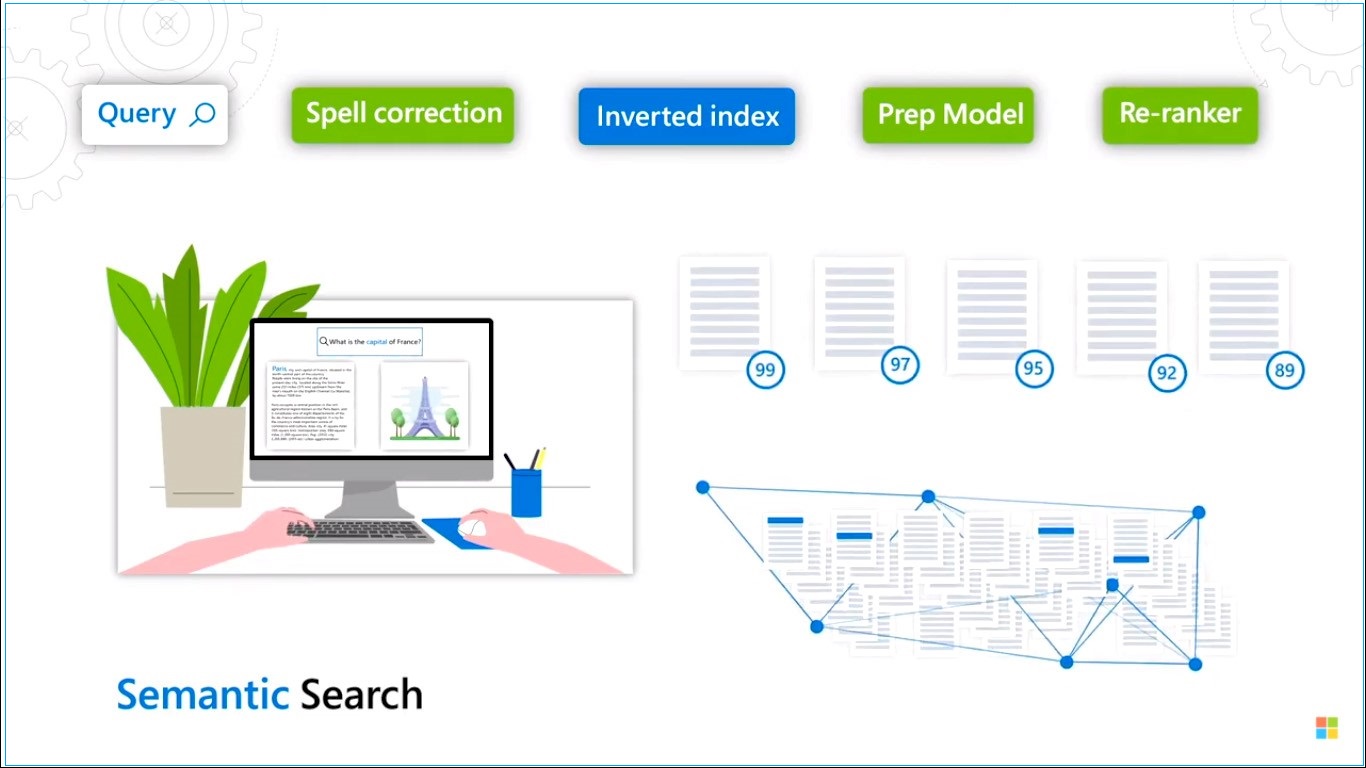

In a traditional keyword search, you would match each word in a search query against an inverted index, allowing for fast retrieval of documents based on if they contain the words of search terms you are searching for. It will return documents that have those words. But, the problem with this is, it only returns exact matches, and ranking is often only based on the record relative frequencies of the words. However, when searching through content written by people, you want to capture the nuance in the language. To do that few key components need to add to improve the search precision and recall. First, as a search query comes in, it passes through a new spelling correction service to improve document recall. Then, use the existing index to retrieve all candidates and pick the top 50 candidates using a simple scoring approach that’s fast enough for scoring millions of documents. Then, a new prep step for these search results runs another model that picks part of the document that matters the most based on the query. From there, results are re-ranked via a much more sophisticated machine learning model that scores document relevance in the context of the question.

Natural language processing and semantic search

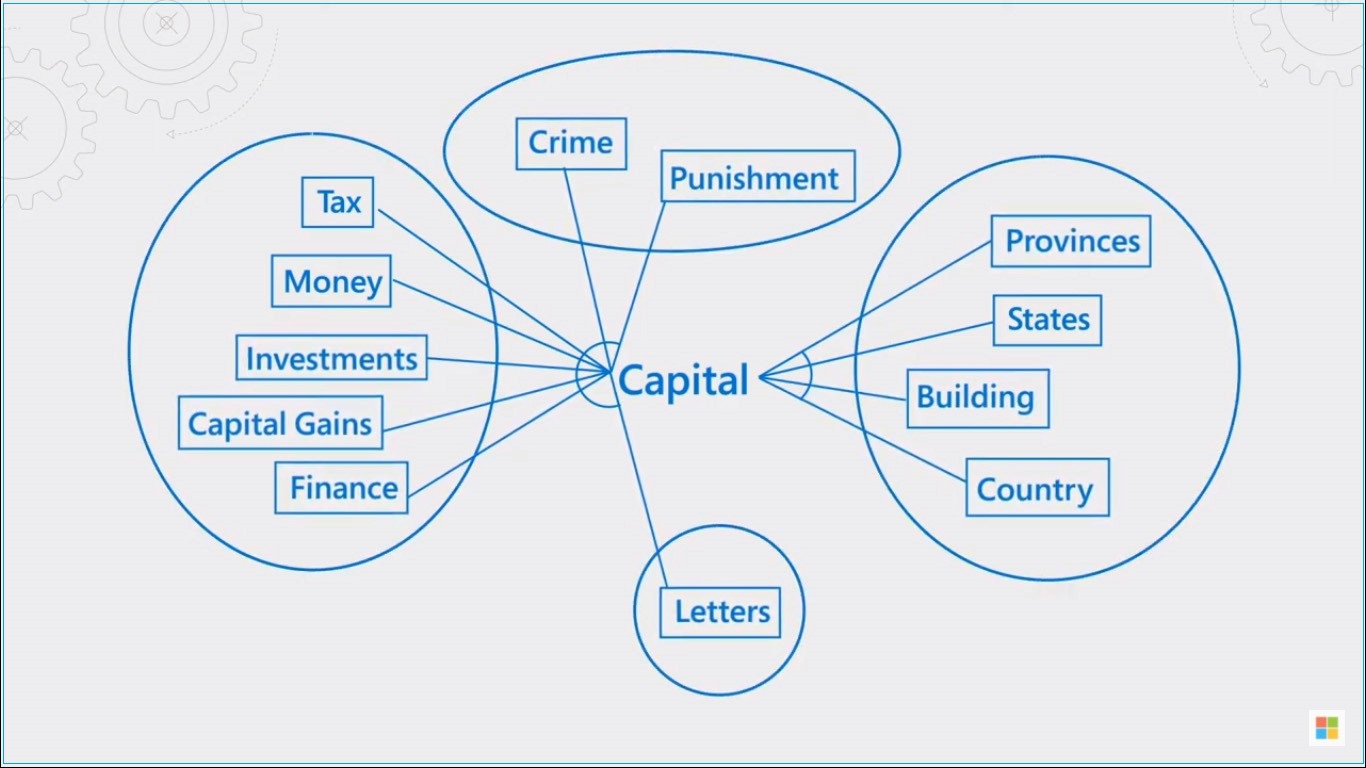

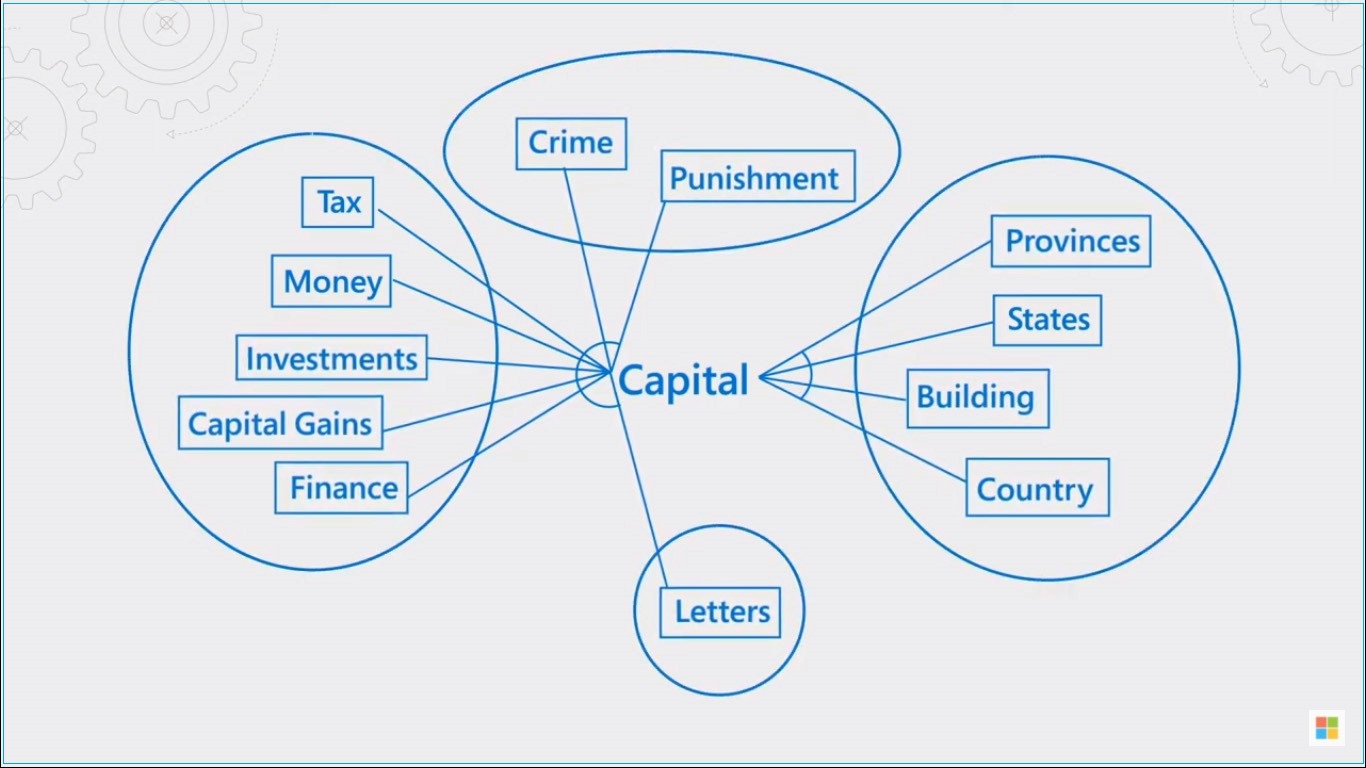

Semantic search is not only matching words. It is also able to understand the context of the terms relative to the terms surrounding them. It makes possible by the recent advancements in natural language processing. First, it needs to do improves recall to find all candidate documents. In the previous example, what’s the capital of France? A key concept was the word capital. The search engine needs to understand that the word capital could be related to states, provinces, money, finances, etc. To move beyond keyword matching, semantic search use vector representations where map words to high-dimensional vector space. These representations are learned, such as the words that represent similar concepts are close together in the same bubble of meaning. These are represented conceptual similarity, even if those words have no lexical or spelling similarity to the word capital.

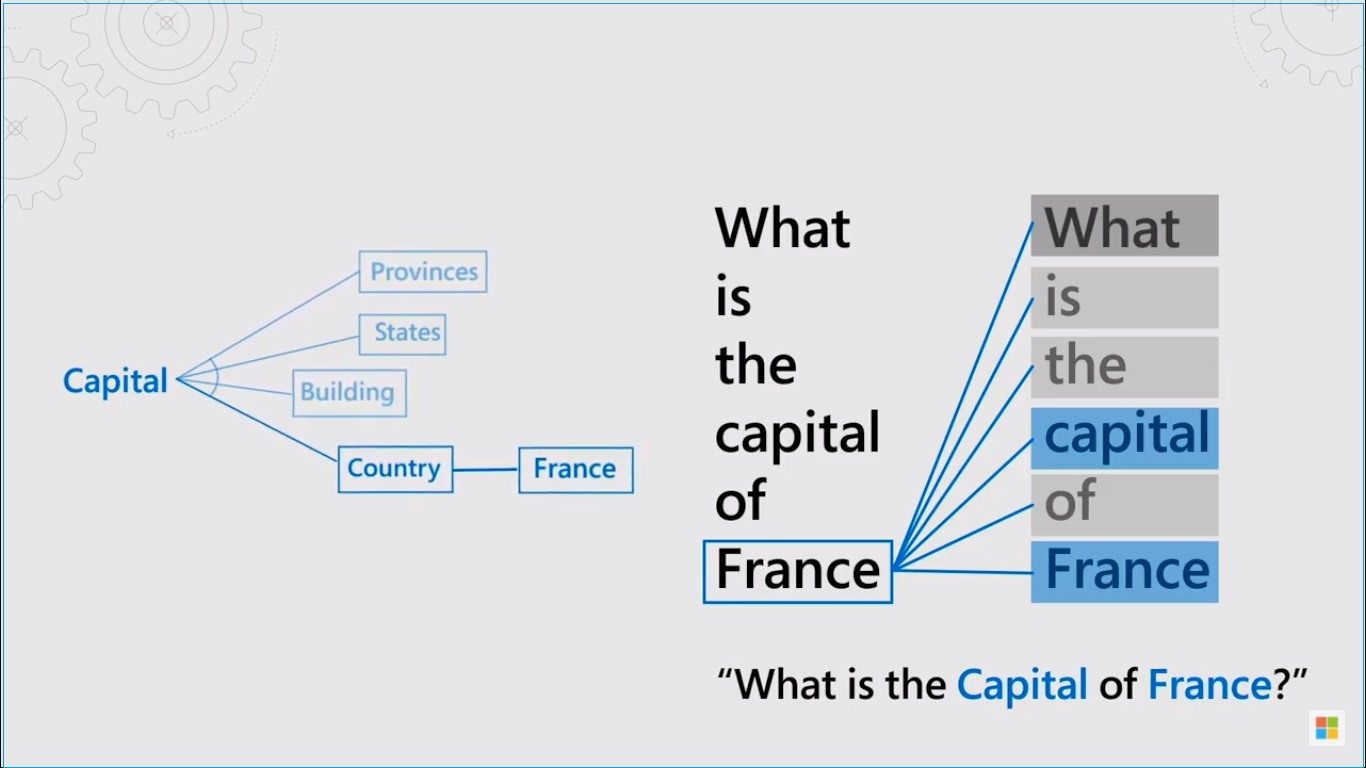

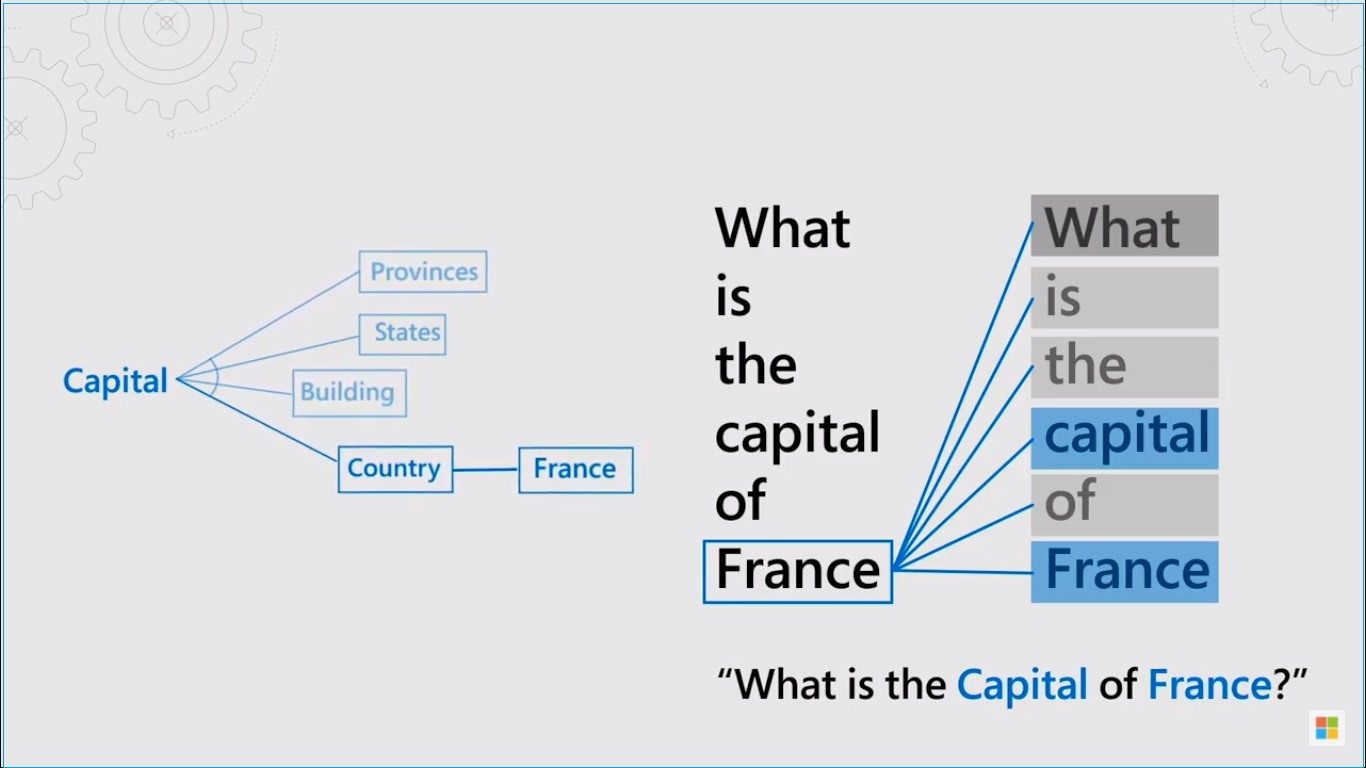

After solved for recall, it needs to solve for precision in results. The transformer, a novel neural network architecture, enables you to think about semantic similarity, not just individual words but of sentences and paragraphs. It uses an attention mechanism to understand long-range dependencies in terms in ways that were impractical before. Implementation starts with the Microsoft-developed Turing family of models that have billions of parameters. Then go through a domain specialization process where we train models to predict relevance using data from Bing. For example, when you search for the capital of France (What is the capital of France?), by reading the whole phrase, it’s able to identify the dependency between capital and France as a country that puts capital in context and quickly returns results with high confidence, in this case for Paris.

Microsoft also builds models oriented towards summarization, machine reading, and comprehension. For captions, it applies a model that can extract the most relevant text passage from a document in the context of a given query. For answers, it uses a machine-reading and comprehension model that identifies possible solutions from documents, and when it reaches a high level of confidence, it will propose an answer.

Microsoft provides all the infrastructures to run these models. But, they consume a lot of computing power and memory and also expensive to run. Therefore, to avoid slow results, it needs to right-size them and tune for performance while minimizing any loss in model quality. Further, it requires to distill and retrain the models to lower the parameter count. Then, the models can run fast enough to meet the latency requirements of a search engine. To operationalize these models, deploy them on GPUs in Azure. When a search query comes in, it can parallelize over multiple GPUs to speed up scoring operations to rank search results.

More guidance on how to get started with Azure Cognitive Search available at aka.ms/SemanticGetStarted, and you can sign up for the public review of semantic search at aka.ms/SemanticPreview.